Have you ever encountered an important document written in Arabic, but it’s only available as a physical text or an image? Manually retyping everything can be time-consuming and error-prone. Thankfully, there’s a solution: Optical Character Recognition (OCR) technology. OCR stands for Optical Character Recognition. It’s a powerful technology that allows computers to extract text from images. This means you can convert scanned documents, photos containing text, or even screenshots into editable digital text.

- Also read about: The story of Panacea

Steps to Extracting Editable Text from Arabic Images

We need some tools to accomplish this task. For this purpose, we will use Tesseract for the OCR task. Tesseract is a powerful open-source OCR engine that can be configured to recognize various languages, including Arabic. We specifically configure Tesseract to understand the unique characteristics of Arabic script, such as letter connection and ligatures. Here’s a breakdown of the steps involved in our method for converting Arabic image text into editable text:

Capturing the Image:

The first step involves obtaining a clear digital image of the Arabic text you want to convert. This can be done by scanning a physical text document or taking a high-quality picture with your phone or camera.

import cv2

image_path = "arabic_text.png"

image = cv2.imread(image_path)

Preprocessing the Image:

Before feeding the image into the OCR engine, some preprocessing steps are necessary to improve the accuracy of the text extraction. This may involve:

Grayscale Conversion: Converting the image from color to grayscale helps the OCR software focus on the shapes of the letters rather than color variations.

grayscale_image = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

Thresholding: This creates a clear differentiation between the text and the background, making it easier for the OCR engine to distinguish them.

thresholded_image = cv2.threshold(grayscale_image, 0, 255,

cv2.THRESH_BINARY + cv2.THRESH_OTSU)[1]

Inversion (Optional): Converts dark areas of image to light and light areas of image to dark, making a clear contrast between background and text

inverted_image = cv2.bitwise_not(thresholded_image)

Text Extraction and Output:

Finally, the preprocessed image is fed into the configured Tesseract engine. Tesseract analyzes the image, recognizes the shapes of the Arabic letters, and converts them into editable digital text. This text can then be saved in various formats (e.g., .txt, .docx) for further editing or use.

config = r"--psm 11 --oem 3"

text = pytesseract.image_to_string(inverted_image, config=config, lang="ara")

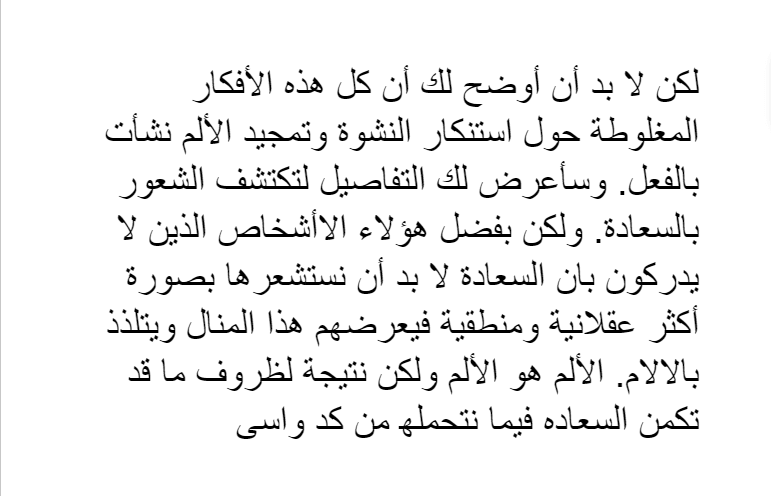

Output of the above Images:

By following these steps, you can effectively extract editable text from Arabic images using OCR technology. This not only saves time and effort but also unlocks the potential for more efficient document management and information access.

- Also read about: Pro tips to effective Document Management

How can OCR be Helpful?

OCR offers a wide range of benefits, especially when dealing with Arabic text:

- Save Time and Effort: Eliminate the need for manual retyping of Arabic documents, saving you valuable time and minimizing errors.

- Improve Accessibility: Make Arabic text in images searchable and editable, allowing for easier document management and information retrieval.

- Enhance Workflow: Streamline your workflow by integrating OCR technology into your existing document processing tasks.